🄸🅁🅀␀

An Evaluation of Object Name Hashing

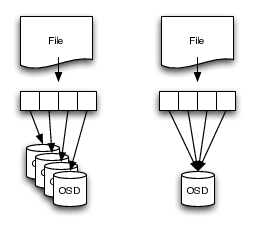

Ceph splits files into objects, which end up distributed across the Ceph cluster. What would happen if the objects ended all up on the same OSD?

I called this modification Striper Prefix Hashing, as I modified the object name hash to only include the prefix added by the Striper. The striper is the piece of code that does the file-to-object splitting (Ceph Dev Doc, Ceph User Doc). Since I was at it I also tested a different hash function.

The analysis uses the most real-world data I was able to find: A 10% sample of a tape-based archive system used by the European Centre for Medium-Range Weather Forecasts (ECMWF). I focused on balance of the resulting cluster (Objects / OSD, Size / OSD), since I could not obtain a Ceph cluster for ~ 1.6 PiB data to do performance testing on.

I did the aforementioned as part of my Master's Thesis. The results are quite interesting: Striper Prefix Hashing doesn't ruin the balance of the cluster.

Update 2016-01-26:

I reran the tests with more hash functions:

Implementation

My changes are available on github: Branch, Diff with current master branch (Old changes, without crypto hashes: Branch, Diff with current master branch)

The changes branch of the Ceph master branch (commit-ish 256dc84) from Jan 14, 2016.

The key change introduced by Striper Prefix Hashing is in the

pg_pool_t:: hash_key method. It is called by

OSDMap::object_locator_to_pg, which converts an object ID (object_t

oid) and object locator (object_locator_t loc, it also contains the pool ID) to a placement group

(pg_t pg).

uint32_t pg_pool_t::hash_key(const string& key,

const string& ns) const

{

string n = make_hash_str(key, ns);

return ceph_str_hash(object_hash, n.c_str(), n.length());

}The changed method, truncates the object's name at the first dot found in

the string inkey, which happens to be the sequence number part for object

names generated by the Ceph Striper.

uint32_t pg_pool_t::hash_key(const string& inkey,

const string& ns) const

{

string key(inkey);

if (flags & FLAG_HASHPSONLYPREFIX) {

string::size_type n = inkey.find(".");

if (n != string::npos) {

key = inkey.substr(0, n) ;

}

}

string n = make_hash_str(key, ns);

return ceph_str_hash(object_hash, n.c_str(), n.length());

}Ceph's Hash Functions

Object name hashes are 32 Bit and computed using a variant of the RJenkins1 hash function. It is not cryptographic and designed for hash tables.

Ceph also contains code of the Linux dcache hash function, which is not used for object names and without changes to the code, not configurable. Its implementation is quite succinct compared to the 57 SLOC of RJenkins:

/*

* linux dcache hash

*/

unsigned ceph_str_hash_linux(const char *str, unsigned length)

{

unsigned long hash = 0;

while (length--) {

unsigned char c = *str++;

hash = (hash + (c << 4) + (c >> 4)) * 11;

}

return hash;

}Since the implementation was already there I included it in the

benchmarks. I plan on re-running the tests with a cryptographic hash function soon.

The new ceph_str_hash functions are wrappers around crypto++'s

functions (See: ceph_hash.cc). Endianness was a bit tricky. I hardcoded

the conversions of crypto++'s byte arrays to unsigned long and put

some sanity checks in the hash simulator. For truncation of SHA-1 and

MD5 I used crypto++'s CalculateTruncatedDigest function.

Methodology

Workload

The workload was taken from the research paper Analysis of the ECMWF Storage Landscape2. The paper discusses two systems; I used the ECFS HPSS trace, which is a tape-based general purpose archive system. It consists of filenames, sizes, access and modification timestamps.

As the trace is quite large with 14.8 PiB and 137 million files, I used a 10% random sample. It is still 1.595 PiB, which would need at least 544 3 TiB hard drives in a pool without replication.

Trace in summary:

- 14950112 files

- 1.595 PiB data in total

- The biggest file is 32 GiB

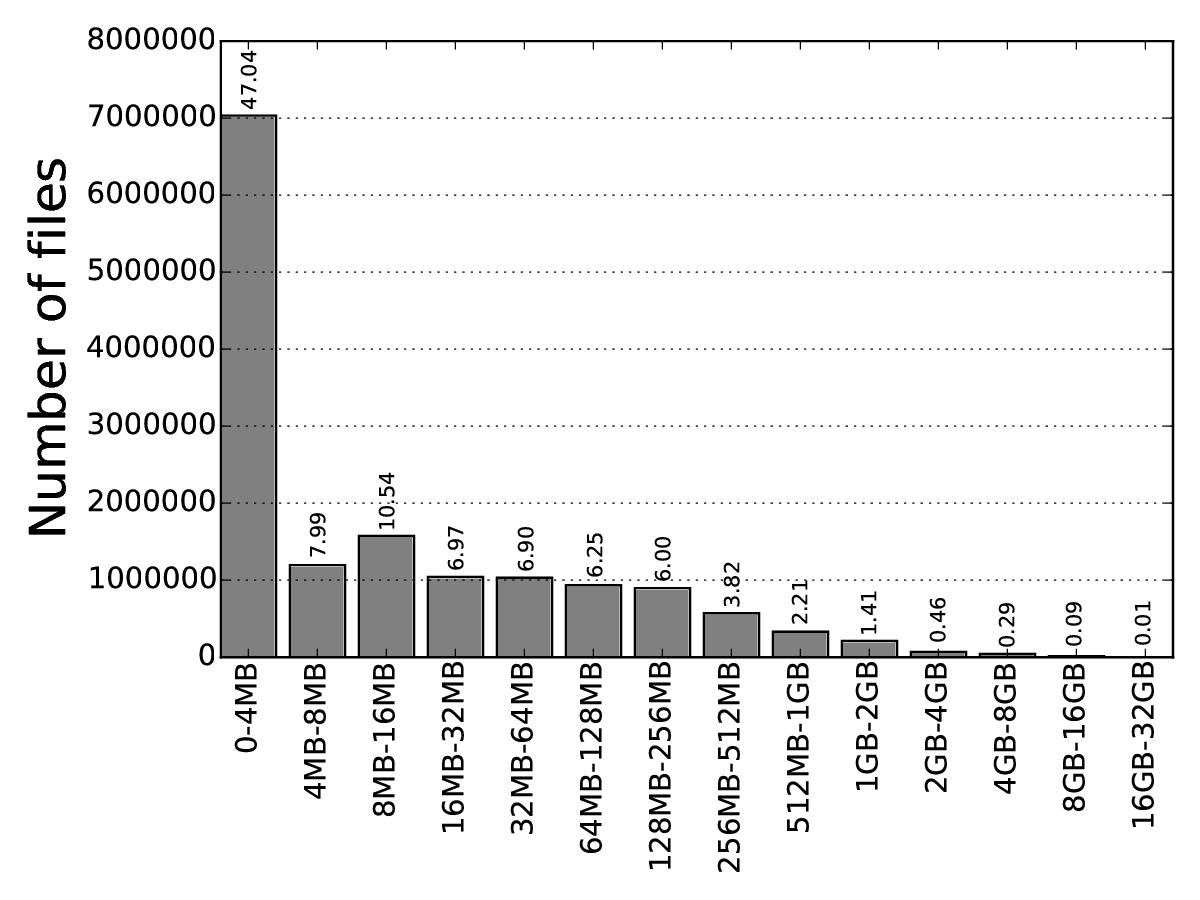

- 47.04 % of all files are smaller or equal to 4 MB (one Ceph object), but in total amount only to 5.495 GiB data (0.34 % total storage)

File size distribution:

Ceph Settings

600 OSDs with 38400 placement groups. Flat OSD layout without failure domains. Simulation ran 4 times, once per setting.

Simulator

I found that running a whole Ceph stack with Ceph FS to simulate ~ 1.6 PiB is far too slow. I tried it anyway, and wrote a FUSE blackhole filesystem to store ~ 1.6 PiB without actually storing it. It's an overlay filesystem that keeps everything (file sizes, xattrs, metadata) except the actual data. Still to slow.

Simulation and lots of CPU cores to the rescue… I could borrow a 32 Core Xeon machine during my research back then, with a little help of some UNIX tools and scripting, each run took 45 minutes.

45 minutes for for ~ 1.6 PiB (600 OSDs), the 10% sample. I tried the full trace with 6000 OSDs, but quickly decided against it after the first run tool over 3 days.

The simulation code is in the branch on github. It compiles as part of the Ceph sources. It expects input on stdin with filename and object size separated by spaces. For each line of input it computes the placement data and outputs the following:

- Filename

- Size

- Number of striper extents

- Number of object names generated by the striper

- Number of unique placement groups assigned to the file

- Number of OSDs assigned to the file

- Number of unique primary OSDs assigned to the file

JSON encoded data:

- List of Object IDs

- List of Placement Groups

- List of OSDs

Results

This is a compilation of the IMHO most interesting results.

Distinct OSDs per File

The Table shows statistics for the number of distinct primary OSDs of all files in the workload that are larger than 4 MiB, because the number of OSDs per file is always 1 for files with only one object. Striper Prefix Hashing works :)

| Statistic | RJenkins | Linux dcache | RJenkins+Prefix | Linux+Prefix |

| Min. | 1 | 1 | 1 | 1 |

| Q1 | 3 | 3 | 1 | 1 |

| Median | 9 | 9 | 1 | 1 |

| Q3 | 35 | 35 | 1 | 1 |

| Max. | 600 | 600 | 1 | 1 |

Balance

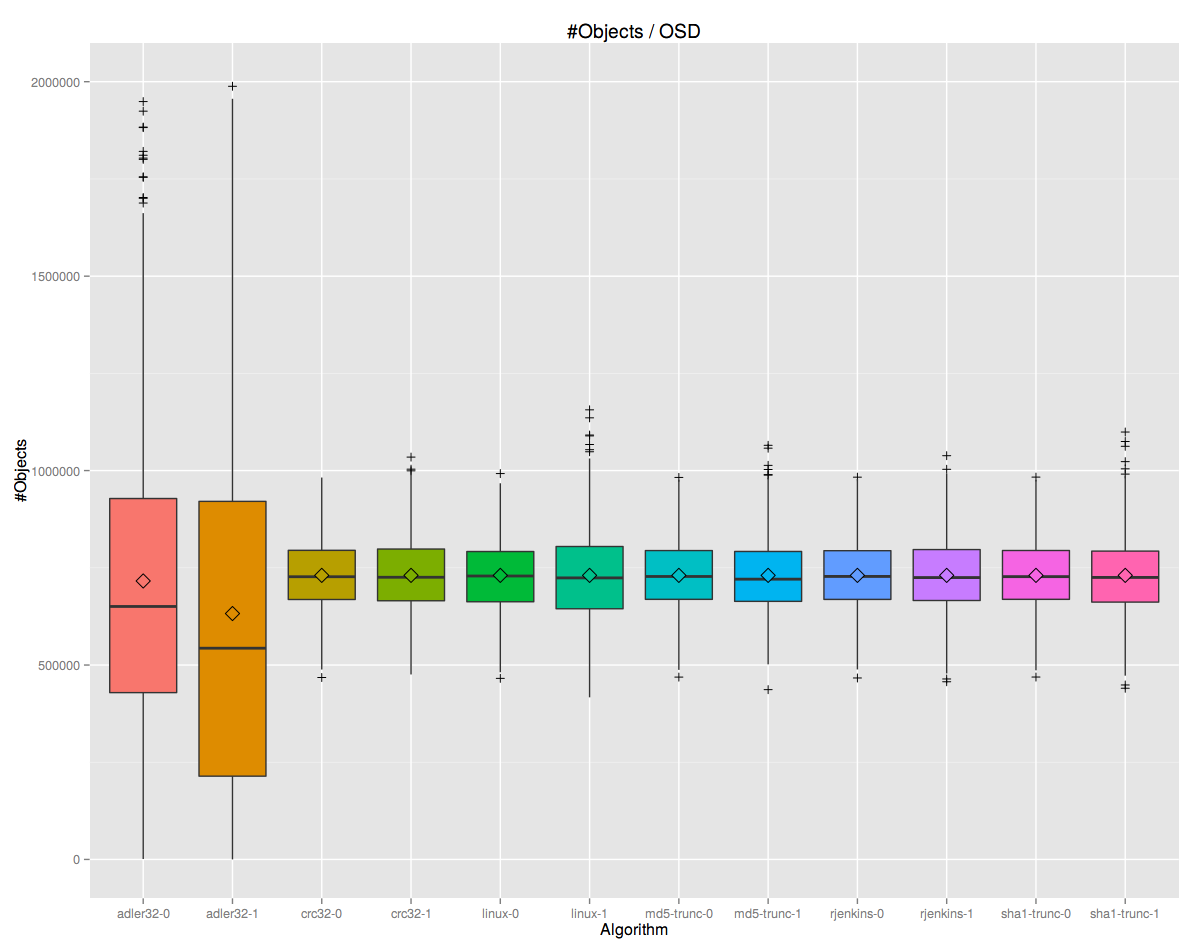

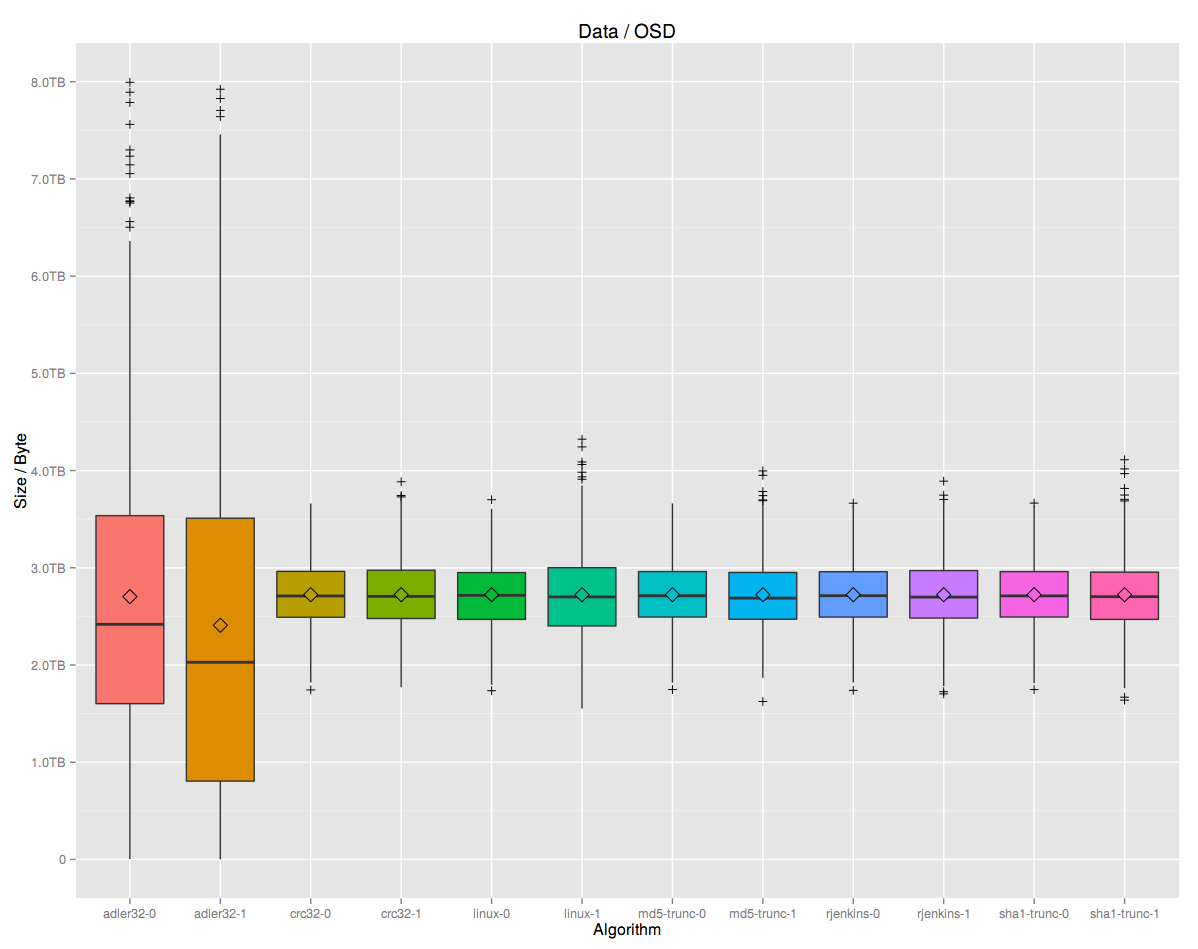

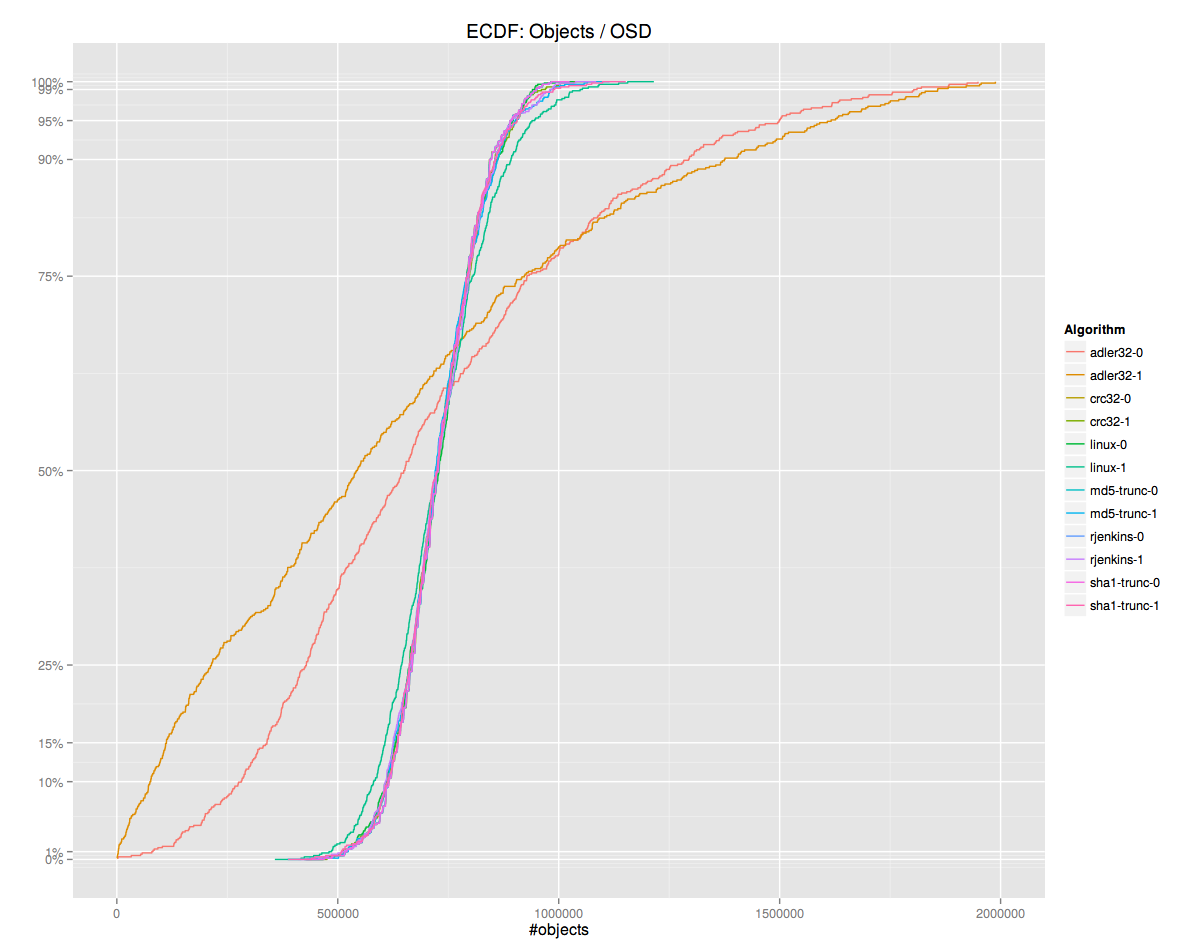

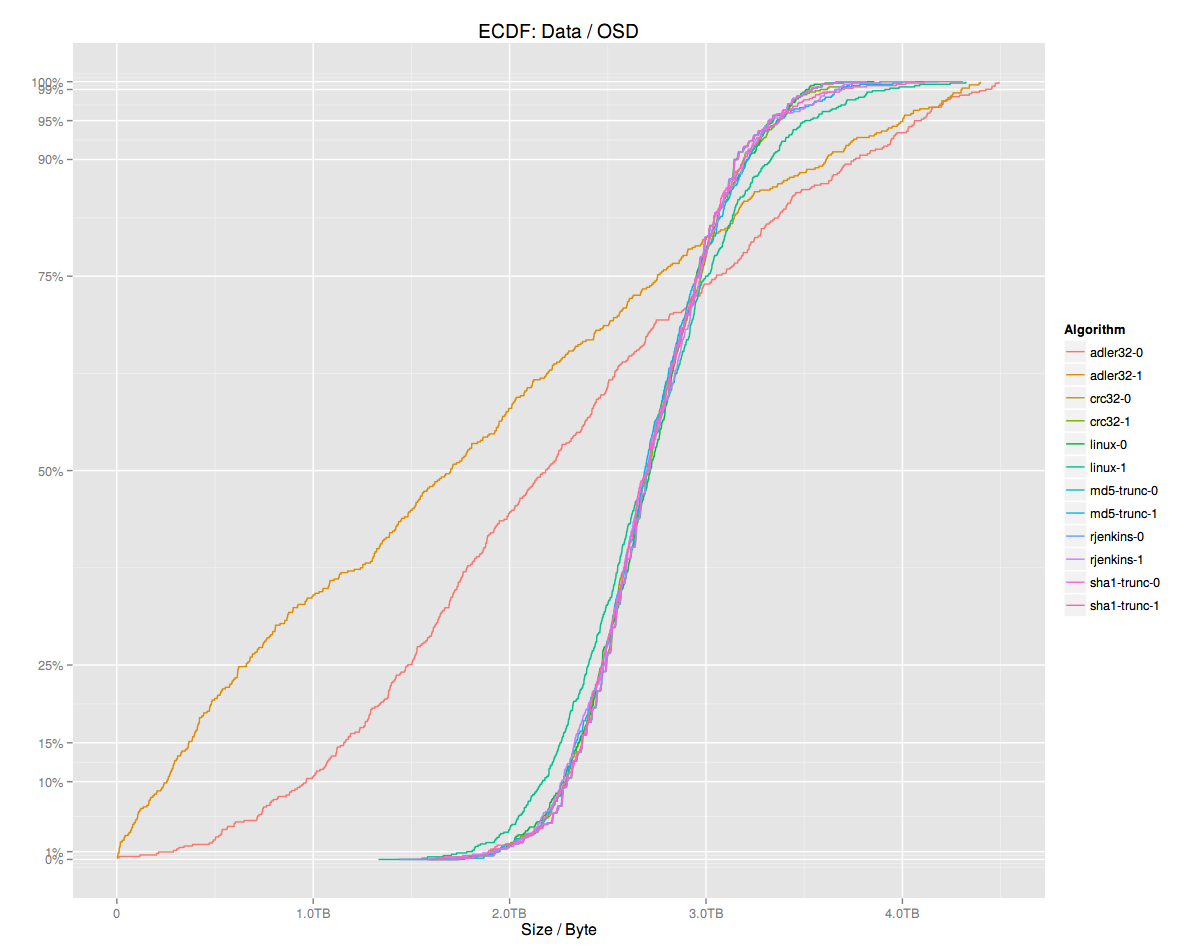

In an ideal balanced Ceph cluster all OSDs store the same amount of data and objects. The next figures show the measured balance for Objects / OSD and Size / OSD .

Note that the graphs are cut off at 8T / 2000000 objects. Outliers of Adler-32 go beyond that.

Adler-32 is obviously a bad choice. For standard hashing all choices (except Adler-32) work. CRC32, RJenkins, MD5 and SHA-1 are close. Linux dcache is slightly inferior.

With Striper Prefix Hashing more differences show: Linux becomes a too weak. RJenkins and CRC32 work especially well, with CRC32 even slightly better.

The following table compares the 99th percentiles with and without Striper Prefix Hashing enabled. The values give an estimate on how much more space the OSDs need for Striper Prefix Hashing to work. For CRC32 and RJenkins it is below 250GB.

| Adler-32 | CRC32 | Linux dcache | MD5 truncated | RJenkins | SHA-1 truncated | |

|---|---|---|---|---|---|---|

| 90% | 1.0TB | 46.7GB | 121.8GB | 63.3GB | 47.9GB | 45.2GB |

| 99% | 3.9TB | 48.9GB | 411.4GB | 125.7GB | 121.8GB | 153.7GB |

| 99.9% | 7.4TB | 156.7GB | 646.7GB | 328.4GB | 153.6GB | 415.2GB |

| 99.99% | 8.4TB | 221.3GB | 638.8GB | 340.7GB | 223.5GB | 452.9GB |

| 99.999% | 8.4TB | 227.8GB | 638.0GB | 341.9GB | 230.5GB | 456.6GB |

| Max | 8.5TB | 228.5GB | 638.0GB | 342.0GB | 231.2GB | 457.0GB |

Discussion / Conclusion

First of all: It works. With Striper Prefix Hashing all objects of a file end up on the same OSD.

Secondly: It does not cause dramatic imbalance for a real-world workload.

What is it good for?

Locality: Small and medium sized files might work better, as the OSDs could prefetch objects belonging to the same file.

What's missing?

Performance Implications: The results do not show the performance implications of storing all objects of a file on a single OSD. All objects of the same file on the same OSD could also improve performance by exploiting locality. But, so far, Ceph OSDs are not optimized for this use case.

In case a storage pool only consists of large files like disk images this locality might not be beneficial.